Implementing the 3-2-1 backup strategy using Borg

I have been running a home server for a couple of years now, since seizing the opportunity to put something together with spare parts after building a new workstation.

One of the first services I installed was Immich, the self-hosted photo and video management solution, with the dream of finally moving away from Google Photos.

I have been trialing the software ever since. It is fantastic, but be warned: it is still under heavy development. I believe the developers are aiming for a stable release in 2025.

My first stab at backing up my Immich data used the zfs-auto-snapshot tool to generate ZFS snapshots, plus a cron job running a basic bash script that performed an incremental zfs send to an external hard disk.

This was only ever meant to be temporary. Before making Immich my full-time photo solution, I wanted something more robust.

3-2-1 Backup Strategy

The 3-2-1 backup rule is a simple, effective strategy for keeping your data safe. It consists of:

- Three copies of your data: the original and two more copies.

- Two different media: This made more sense when the rule was created. Today it is enough to have two different devices.

- One copy off-site: Keep one copy of your data in a remote location, e.g. cloud storage.

Borg, Borgmatic and BorgBase

The borg CLI is a powerful tool for deduplicating backups. borgmatic is a configuration-driven wrapper around borg that hides some of the complexity and simplifies the backup process. BorgBase is to borg as GitHub is to git.

See installation instructions.

Install from PyPI for the latest release. Pre-compiled binaries on their GitHub release page were discontinued after v1.2.9, and distribution packages also tend to be very outdated.

I started by creating a remote borg repository on BorgBase. At the time of writing I was able to provision 250GB for $24 (billed annually), which was perfect for me, as my current Immich data totals ~210GB. The plan is flexible up to 1TB, at $0.01/GB/month for storage beyond the base quota.

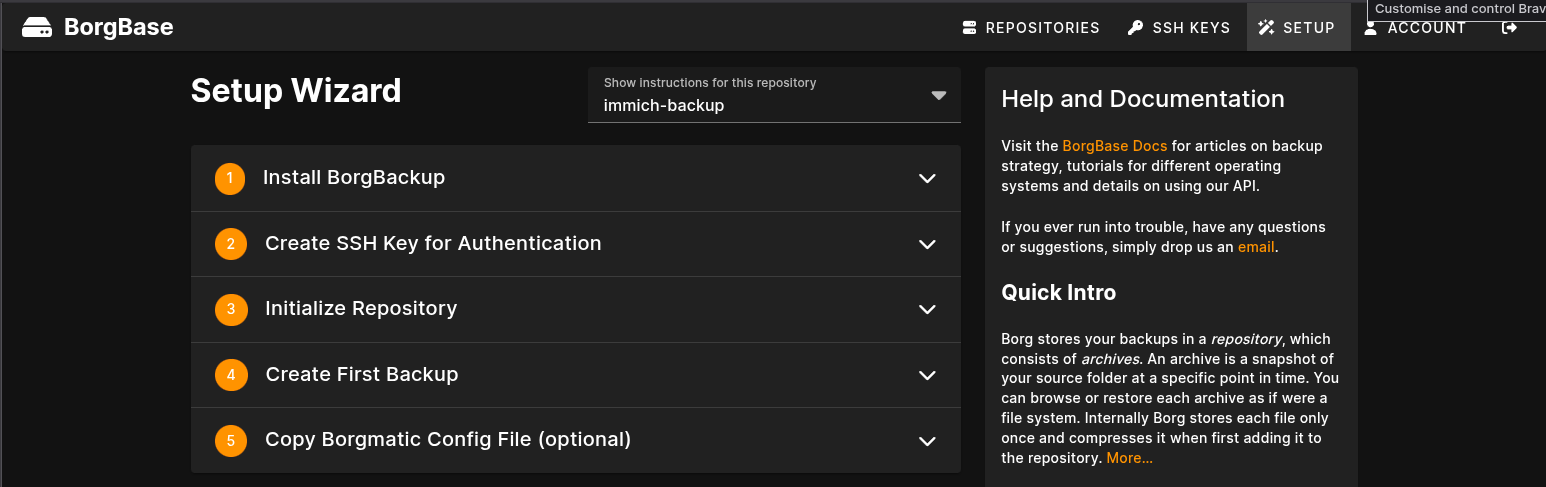
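As a rough sanity check on the pricing quoted above, here is a minimal Python sketch of the yearly cost at different usage levels. It assumes overage is charged on every GB above the 250GB base quota for all 12 months, and it treats 1TB as 1000GB. How BorgBase actually meters and bills overage may differ, and the function name is purely illustrative.

```python
# Figures taken from the plan described above; amounts are in US cents
# so the arithmetic stays exact.
BASE_QUOTA_GB = 250
BASE_PRICE_CENTS_PER_YEAR = 2400      # $24, billed annually
OVERAGE_CENTS_PER_GB_MONTH = 1        # $0.01/GB/month
PLAN_CEILING_GB = 1000                # "up to 1TB" (assumed to mean 1000GB)


def annual_cost_cents(used_gb: int) -> int:
    """Estimate the yearly bill for a constant usage of `used_gb`.

    Assumption: overage applies to every GB above the base quota,
    every month of the year.
    """
    if not 0 <= used_gb <= PLAN_CEILING_GB:
        raise ValueError("usage must be between 0 and the plan ceiling")
    overage_gb = max(0, used_gb - BASE_QUOTA_GB)
    return BASE_PRICE_CENTS_PER_YEAR + overage_gb * OVERAGE_CENTS_PER_GB_MONTH * 12


if __name__ == "__main__":
    for gb in (210, 250, 400, 1000):
        print(f"{gb:>5} GB -> ${annual_cost_cents(gb) / 100:.2f} per year")
```

Under these assumptions my ~210GB stays at the flat $24, and growing to 400GB would come to $42 a year.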

BorgBase provides a setup wizard with step-by-step instructions for repository initialization and SSH key setup. It even generates a pre-populated borgmatic config.yml file to get you up and running quickly.

A basic /etc/borgmatic/config.yml file looks like this:

    # A list of directories to back up
    source_directories:
        - /path/to/data/

    # List of borg repositories to store backups
    repositories:
        - path: ssh://<something>@<repo-name>.repo.borgbase.com/./repo
          label: "backup on BorgBase"
        - path: /path/to/backup/directory
          label: "backup on external hard disk"
          encryption: repokey-blake2

    exclude_patterns:
        - '*.pyc'
        - ~/*/.cache

    # Enable ZFS Snapshot Integration for this location.
    # This tells borgmatic to automatically handle ZFS snapshots
    # for the datasets listed in `source_directories` for this location.
    # It will create a snapshot before the backup, use it, and then destroy it.
    zfs: {}

    # Stay in same file system (optional)
    # one_file_system: true

    compression: auto,zstd
    encryption_passphrase: CHANGEME
    archive_name_format: '{hostname}-{now:%Y-%m-%d-%H%M%S}'

    # Number of times to retry a failing backup before giving up.
    retries: 5
    retry_wait: 5

    keep_daily: 3
    keep_weekly: 4
    keep_monthly: 12

    # Don't read whole repo each time
    skip_actions:
        - check
    check_last: 3

    # Shell commands to execute before or after a backup
    commands:
        - before: action
          when: [create]
          run:
              - echo "`date` - Starting backup"
        - after: action
          when: [create]
          run:
              - echo "`date` - Finished backup"

Run a backup based on the above configuration:

    # Run a backup to each listed repository
    borgmatic create

    # Run a backup to a specific repository (matched by its label) and show progress
    borgmatic create --repository "backup on BorgBase" --verbosity 1 --progress
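The keep_daily, keep_weekly and keep_monthly settings in the configuration only take effect when pruning runs, and it is not obvious at a glance which archives survive. The Python sketch below is a simplified model of that kind of retention policy, not borg's actual implementation: it works on whole dates, ignores time zones and the other keep rules, and the function name is made up for illustration. Each rule keeps the newest archive in each of its most recent periods, skipping archives already kept by an earlier rule.

```python
from datetime import date, timedelta

# (rule name, function mapping a date to its period, how many to keep)
# The counts mirror keep_daily / keep_weekly / keep_monthly in the config.
RULES = [
    ("daily", lambda d: d, 3),
    ("weekly", lambda d: tuple(d.isocalendar()[:2]), 4),   # (ISO year, ISO week)
    ("monthly", lambda d: (d.year, d.month), 12),
]


def archives_to_keep(archive_dates):
    """Return {date: rule} for the archives a simplified prune would keep."""
    kept = {}
    newest_first = sorted(archive_dates, reverse=True)
    for rule, period_of, limit in RULES:
        last_period = None
        count = 0
        for day in newest_first:
            period = period_of(day)
            if period == last_period:
                continue              # only the newest archive per period is a candidate
            last_period = period
            if day in kept:
                continue              # already kept by an earlier rule
            kept[day] = rule
            count += 1
            if count == limit:
                break
    return kept


if __name__ == "__main__":
    # Hypothetical history: one archive per day for 60 days.
    newest = date(2025, 6, 30)
    history = [newest - timedelta(days=i) for i in range(60)]
    for day, rule in sorted(archives_to_keep(history).items(), reverse=True):
        print(day.isoformat(), rule)
```

With only 60 days of history the monthly rule cannot find 12 months, so far fewer than 3 + 4 + 12 archives are kept. The retained set grows as the history gets longer.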

I have been using this setup for a week or so and have found it to work very well. Even in 2025 my broadband connection has a paltry 8 Mb/s upload speed, so although I only have ~210GB of data to back up, the initial backup took ~2.5 days. Shameful!

Thankfully, borg is smart enough to only upload new data on subsequent backups.
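For anyone wondering how long their own first upload might take, here is a back-of-the-envelope Python sketch. It assumes decimal units (1GB = 10^9 bytes, 1 Mb/s = 10^6 bits per second), a fully saturated link, and no savings from compression or deduplication, so treat the result as a rough lower bound. The function name is illustrative.

```python
SECONDS_PER_DAY = 86_400


def upload_days(size_gb: float, upload_mbit_per_s: float) -> float:
    """Estimate how many days it takes to upload `size_gb` gigabytes.

    Assumes an ideal link running at full speed the whole time.
    """
    if upload_mbit_per_s <= 0:
        raise ValueError("upload speed must be positive")
    total_bits = size_gb * 1e9 * 8
    seconds = total_bits / (upload_mbit_per_s * 1e6)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"{upload_days(210, 8):.2f} days")
```

Plugging in my numbers (210GB at 8 Mb/s) gives roughly 2.4 days, which lines up with the ~2.5 days the initial backup actually took.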

The source directory configured for backup is linked to a borg repository, and borg creates a new archive in that repository for each backup. Data is deduplicated across the whole repository, so if a long-running backup is interrupted, the next backup (to a new archive) only needs to upload the data that is still missing rather than everything again.
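To make the idea concrete, here is a toy Python model of a deduplicating repository. It is a sketch, not how borg is implemented: it uses tiny fixed-size chunks and no encryption or compression, whereas borg uses content-defined chunking, and the class and method names are invented for this example. The point it illustrates is that chunks are stored under their hash, so anything already in the repository is never uploaded twice.

```python
import hashlib
from typing import Dict, List, Optional

CHUNK_SIZE = 4  # unrealistically small, to keep the demo easy to follow


class ToyRepository:
    """Stores chunks by SHA-256 hash; archives are just lists of chunk IDs."""

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}
        self.archives: Dict[str, List[str]] = {}

    def backup(self, name: str, data: bytes, fail_after: Optional[int] = None) -> int:
        """Back up `data` as archive `name`; return the number of chunks uploaded.

        `fail_after` simulates the connection dropping after that many uploads.
        """
        uploaded = 0
        chunk_ids = []
        for start in range(0, len(data), CHUNK_SIZE):
            chunk = data[start:start + CHUNK_SIZE]
            chunk_id = hashlib.sha256(chunk).hexdigest()
            if chunk_id not in self.chunks:
                if fail_after is not None and uploaded >= fail_after:
                    raise ConnectionError("simulated interruption")
                self.chunks[chunk_id] = chunk   # the "upload"
                uploaded += 1
            chunk_ids.append(chunk_id)
        self.archives[name] = chunk_ids         # only recorded if the run completes
        return uploaded

    def restore(self, name: str) -> bytes:
        return b"".join(self.chunks[chunk_id] for chunk_id in self.archives[name])


if __name__ == "__main__":
    repo = ToyRepository()
    photos = b"AAAABBBBCCCCDDDDEEEE"            # five distinct chunks
    try:
        repo.backup("monday", photos, fail_after=3)
    except ConnectionError:
        print("interrupted with", len(repo.chunks), "chunks already stored")
    print("retry uploaded", repo.backup("tuesday", photos), "chunks")
    print("next day uploaded", repo.backup("wednesday", photos + b"FFFF"), "chunk(s)")
```

The retry only uploads the two chunks that were missing, and the following backup only uploads the one new chunk, even though each run targets a new archive.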